Sometimes you just play around with a technology without using it in professional environment. You learn some very useful stuff but that’s it. You might think it’s super easy to use until you’re going to use it in real professional environment. This is how I learned Elasticsearch the hard way.

Multi-tenancy

At moment of writing I’m working at a SaaS company called Bynder and like

every SaaS company we need a multi-tenancy setup. When this project was started we had

an initial setup where we had one index per client. What we simply did was some-demo-index-<uuid-here>.

This setup worked quite well until clients started to complain about flaky behaviour.

When checking out our Sentry logs we found out that we ran into sharding issues.

Initially we weren’t really addressing the issue and tried to suppress it.

After all maybe it was a bit unlucky that we came into situation.

No sharding

To understand why we ended up in this situation we had to learn that indices are not for free. Every index creates an x amount shards, shards can be seen as a self-contained search engine. These shards consume memory as their main resource. Creating many indices leads to many shards EVEN if you configure “number_of_shards" to 1! Also having many shards containing almost no data is super inefficient. To set a base line: It’s perfectly fine a single shard contains somewhere between 20GB and 50GB of data.

How to do multi-tenancy with ES

Elasticsearch indices support the concept of aliasing. With this concept you can have a single index and filter out a selection with an alias. Filtering can be done on properties of the document but also on time based values. For a good multi-tenancy setup, it’s recommendable to add the account_id / customer_id / client_id or whatever id you use and make it a part of the document. By doing that use can use an alias to filter out the right documents.

example below:

index_name = "my_demo_index"

index_alias_name = "{}_{}".format(index_name, account_id)

create_body = {

"filter": {

"term": {

"account_id": account_id

}

}

}

result = await client_containers.elastic_search.indices.put_alias(

index=index_name,

name=index_alias_name,

body=create_body,

request_timeout=self.ES_REQUEST_TIMEOUT

)

If you want search only in documents from account_id 3DD7DA18-44EE-437F-98EE-58C378A79A91 in

my_demo_index then select index alias my_demo_index_3DD7DA18-44EE-437F-98EE-58C378A79A91

and internally elasticsearch will add a term to the search query that filters out all the

correct documents

Removing aliases

Removing aliases will not lead to removal of documents. This is important to know if you need to comply to for example GDPR standards. It would also be a bit weird right? Data from aliases could also be filtered on time like:

Give me all updated documents from the last 30 minutes

If you need to remove all data a tenant then you will need to remove the alias and the documents.

Disaster recovery

Another problem that’s often overlooked is disaster recovery. If your elasticsearch cluster somehow get destroyed then you often want to recover asap. The Bulk API will save you a massive amount of HTTP calls which are extremely slow when you need to do a fast recovery.

The bulk API is almost as fast as a regular insert action but the bulk API can easily handle 10k documents at the time. To be fair Elasticsearch probably need a little more time to process the 10k of documents. You can even crank up the performance by disabling replica’s on initial load and more. Which steps you can take during a disaster recovery really depends on your situation. I brought recovery times back from 2+ hours to a few minutes and I’ll bet that there is more to gain. One final note that I’ll would like to add on this topic: Test it over and over and over again. Get those insights about your recovery time. Your company might have agreements with clients about this topic, and you definitely want to be sure that your money where your mouth is.

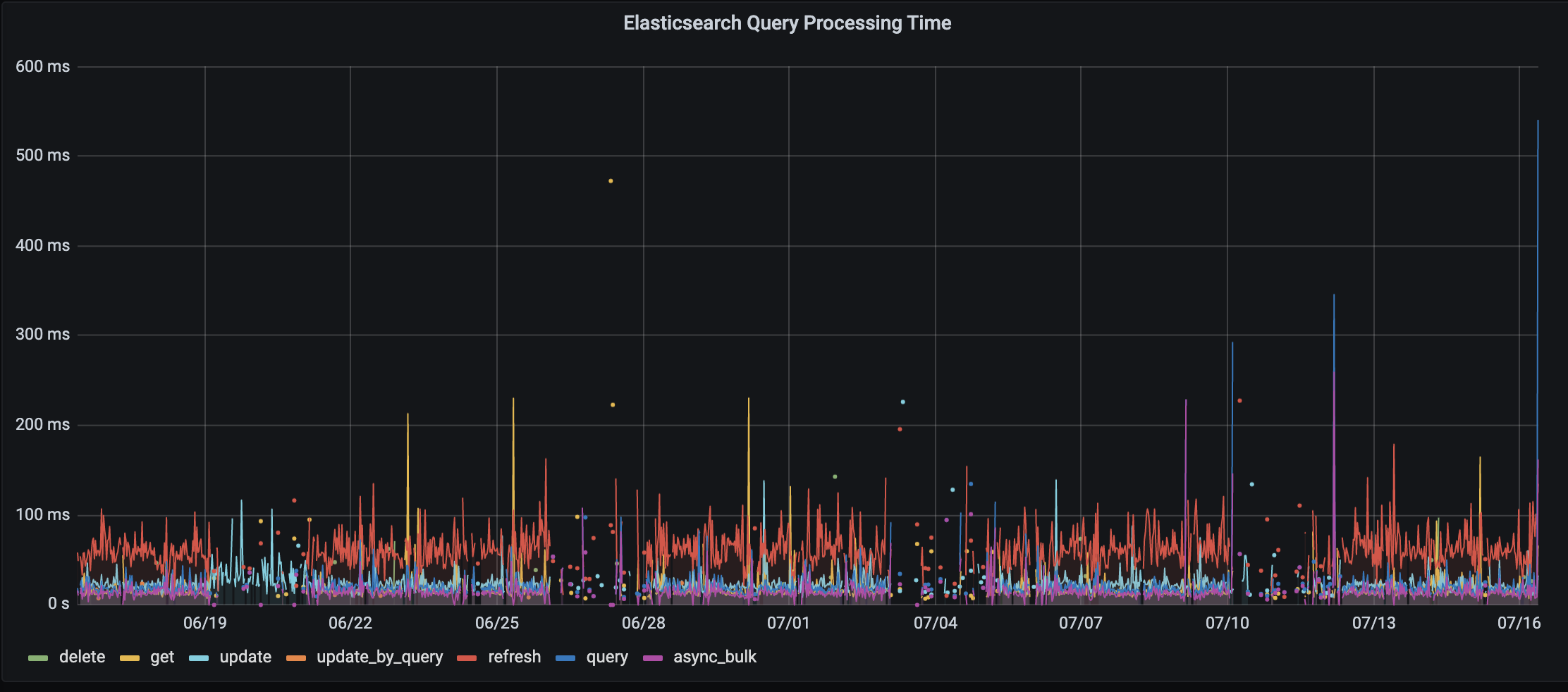

Visibility

The last and final step is to create visibility, health checks and alerts. At Bynder we created Grafana boards which shows the performance metrics of the whole application including the performance with Elasticsearch. We also have metrics about the cluster state, information about the index and shards. Without this visibility it’s nearly impossible to fine tune and improve your application.